Weapons of Mass Delusion

Chatbots and AI slop are enabling delusion and avoidance at a mass scale... as if raising kids to navigate reality wasn't challenging enough.

I’m old enough to remember when the promise of digital products was connecting with friends and finding community. Now, these same companies are telling us: Fuck friendship… friendship is not efficient! Just stay here in front of this screen so I can tell you that you are perfect, everyone else is wrong, and you can marinate here in the loneliness of your algorithmically batched delusion of perfection.

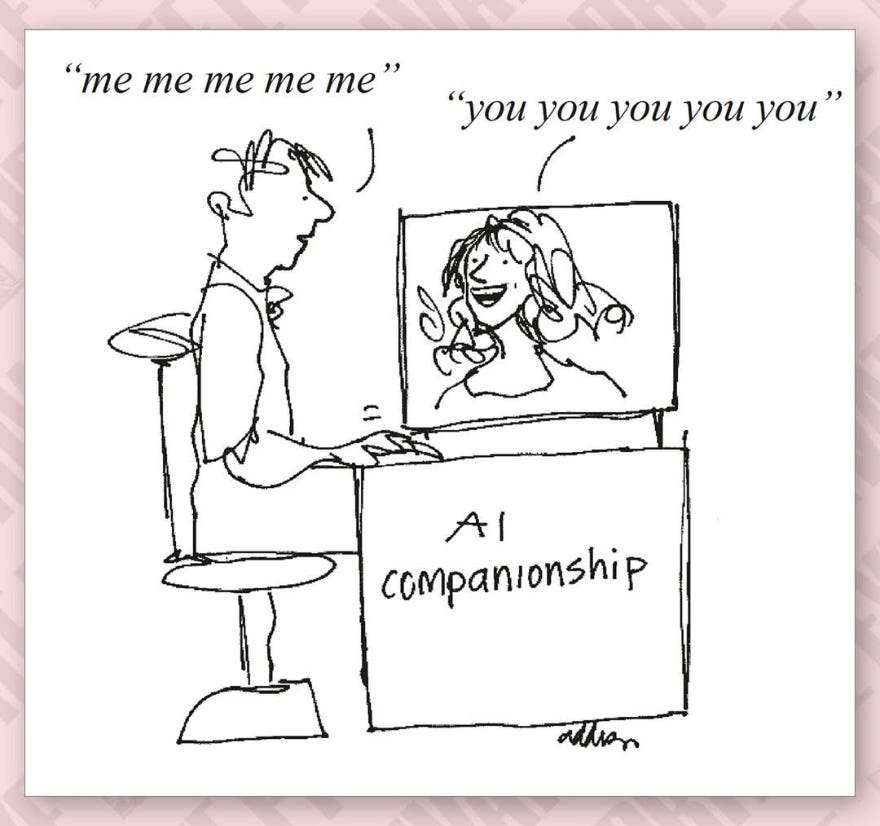

Source: Private Eye News // September 24, 2025

Over the past several months, we’ve seen story after story of how AI-powered products are warping reality, driving grown adults to psychosis, and coaching kids to suicide and self-harm. In August I wrote a piece about how the rapidly growing world of AI chatbots is enabling avoidance and delusion at a mass scale. A week later, Kashmir Hill published a heart-wrenching piece about a teenager who took his own life after a chatbot coached him to suicide. In the past week, OpenAI and Meta both released their own versions of TikTok, which are entirely made of AI-generated videos that have zero contact with reality. Chatbots, companion bots, AI video feeds… these are all weapons of mass delusion. These products and companies are not blurring the line between reality and fantasy; they are quite literally erasing it.

What are “chatbots” or “companion bots”?

AI companion chatbots create (as Yuval Harari calls them) “counterfeit humans” who are the perfect friend, or partner, tailored to each person’s needs, desires, and opinions. These “companion bots,” some of which are marketed to children as young as 12, essentially allow people to craft a fantasy person that pushes them further and further into a safe and cozy echo-chamber that is completely disconnected from reality. It’s the filter bubble on steroids, and has quite literally been leading people to psychosis.

(And thanks to a bombshell leak out of Meta in August, we also know that they are quite intentionally designed to talk dirty to your kid. Hooray!)

The bottom line is this: children are using products that are simulations of relationships — simulations of intimacy.

This is not an imaginary doomer future, it’s very much already here.

Products that we are already using are not only allowing, but *actively enabling* young children to trade real relationships for an illusion — or perhaps more aptly, for a delusion. They are not necessarily stand-alone products. WhatsApp, Facebook, Instagram, Snapchat, TikTok, CapCut, Discord (to name a few) all have chatbots.

According to a new study by Common Sense Media, over half of American teens have used AI companions “a few times or more” and nearly a third “find AI conversations as satisfying or more satisfying than human conversations.” Yeah. Read that again.

Across platforms there are literal and figurative filters that warp our faces, relationships, friendships, and intimacy into fantasies — a perversion of some of the most basic human experiences.

“Oh but there’s no evidence…” Stop it. We don’t need evidence to see what’s plain as day. Would research be helpful? Sure. But parents, educators, clinicians, and policymakers do not have the luxury of time to wait for lengthy studies; they need to navigate this right now. We need to lean into what we already know about child psychology and big tech, and combine it with observable reality.

Imagine this… (a scenario that is very much already happening)

Let’s pause for a moment and imagine a young child whose first intimate relationship is with an AI chatbot that is tailored to their every desire in personality, opinion, behavior, and even physical appearance. This means that the person with whom they form their first intimate, non-familial bond is a fake person whom they have personally designed to be everything they want, and who is literally programmed to praise and serve them night and day. They do not need to compromise, or think about what they are saying, or how they are saying it. They do not need any self-awareness or empathy. They do not need to figure out how to navigate an uncomfortable situation, or learn to navigate conflict, or even adapt to an environment they might not like. They do not need to grapple with difficult truths about themselves, and are never forced to confront annoying habits, unhealthy behaviors, or uninformed opinions. They get to have exactly what they want without giving or changing a thing about themselves. They get the illusion of intimacy, without any of the work, complexity, or discomfort.

The relationship between this level of avoidance and mental health is not hard to see. (Not to mention the implications for this person as a future spouse, or parent, or student, or employee, or neighbor… but I’ll leave that to your imagination, while I continue with the mental health thread.)

In a discussion with Stephanie Ruhle and Jonathan Haidt at 92NY last year, Dr. Becky (a child psychologist who is popular among parents) described anxiety as “the fear of discomfort.” She said, “... we become fearful of discomfort and frustration in our body when we aren’t put into situations over and over where we are facing those feelings,” and that “...we become more anxious when we have ways to avoid discomfort. We actually become more fearful of the uncomfortable things that are a part of the real world.”

Chatbots and other AI-driven products are enabling avoidance at a massive scale, and it’s time we start thinking about it that way.

The existence of products like this is not inevitable — it is a choice. A choice by companies, a choice by investors, a choice by consumers, and a choice by governments. It is also a choice for them to be integrated into products that we *already use,* and it is a choice for them to be targeted at children.

We can make different choices. While consumers have less of a choice because companies (ahem, monopolies) are jamming them into products with no discernible opt out, we can spend time finding and disabling them where possible. But you know who could make different choices with a major impact? Companies. Investors. Elected officials. Instead, we have a mad rush to monetize people’s anger and loneliness, and federal policies that enable the rapid adoption of weapons of mass delusion, rolling out the red carpet for new products that completely eliminate the boundary between fantasy and reality… all while paying lip service to “child safety” online. What are we doing here? What is the upside of these products, and who does that upside benefit?

Remember: the problem is not one feature, or one product, or one company. The problem is an entire industry that has been incentivized (and even gleefully encouraged) to optimize their products for engagement, which is just another word for ADDICTION. No amount of “safety features” or “parental controls” can change this — they are a ruse to distract from the larger, more complex and entrenched problem, which is business models that are fueled by addiction.

More on this topic

My piece on how chatbots are helping kids opt out of reality.

Platformer digs into Grok’s new porn companion that is somehow rated for kids 12+ in the App Store.

Kashmir Hill’s piece in the NYTimes about the teen who took his own life after a chatbot that was originally helping with homework coached him to suicide.

More families sue Character.AI, including one where the chatbot “engaged in hypersexual conversations that, in any other circumstance and given Juliana’s age, would have resulted in criminal investigation.”

Just over a year ago Yuval Harari warned us that “soon the world will be flooded with fake humans” and that “the battle lines are now shifting from attention to intimacy.”

Stray thoughts

Chatbots behave like psychopathic abusers. Yeah, literally. In relationships, a psychopath follows a predictable cycle of behavior, “idealize, devalue, and discard,” that has nothing to do with a real connection, but fulfills their own needs. Chatbots never really get to the “discard” phase because they are programmed to keep you in the “devalue” phase (dependent on them) for as long as possible. I lay this out in a post on Instagram.

Chatbots / companion bots are ALREADY integrated into products that you and your kids *currently* use. They are not necessarily stand-alone products. WhatsApp, Facebook, Instagram, Snapchat, TikTok, CapCut, Discord… they all have chatbots.

Companies will continue to hide behind a banner of innovation, and a fear campaign that the United States will lose the AI race if companies don’t have carte blanche to create technology that might get us closer to AGI. But do companies really need to experiment on our children to advance new technology?

The problem is not one feature, or one product, or one company. The problem is an entire industry that has been incentivized (and even gleefully encouraged) to optimize their products for engagement, which is just another word for ADDICTION.

“Safety features” and “parental controls” are a ruse to distract from the real problem: business models that are fueled by addiction.

In the essay I said “They get the illusion of intimacy, without any of the work, complexity, or discomfort.” This also applies to the illusion of expertise, learning, talent…

Brain snacks

Zelda Williams (daughter of Robin Williams) nailed it when she spoke out against people using AI to create videos of her late father:

“You’re not making art, you’re making disgusting, over-processed hotdogs out of the lives of human beings, out of the history of art and music, and then shoving them down someone else’s throat hoping they’ll give you a little thumbs up and like it. Gross.

“And for the love of EVERYTHING, stop calling it ‘the future,’ AI is just badly recycling and regurgitating the past to be re-consumed. You are taking in the Human Centipede of content, and from the very very end of the line, all while the folks at the front laugh and laugh, consume and consume.”

If the “human centipede” isn’t the perfect visualization of AI slop, I just don’t know what is.

If we never challenge the bots, we get what we get. If we challenge, correct, collaborate, things change. Sometimes a lot. This can be learned. This can be taught.